Introducing the VCK5000 Versal™ Development Card for Superior AI Inference

We are excited to announce that for a limited time, you can purchase the VCK5000 Versal™ development card for AI inference for just $2,495! If you're developing AI inference workloads with pre-built Vitis™ AI accelerators, be sure to take advantage of this limited-time price.

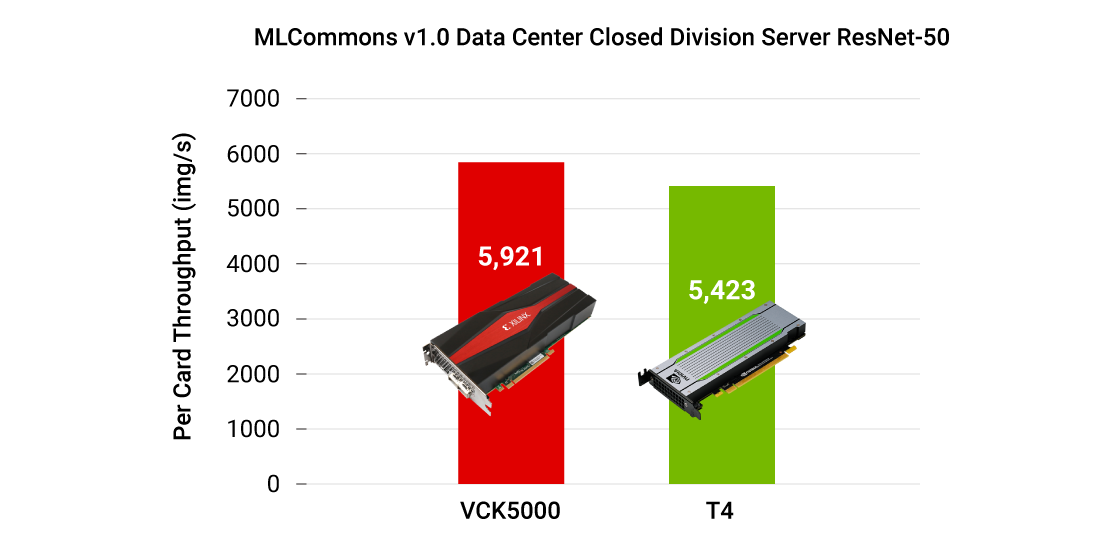

Delivering 100X greater compute power than today’s server-class CPUs and greater MLPerf inference performance than today’s server-class GPUs, the VCK5000 is an ideal development platform for cloud acceleration and edge computing applications.

The VCK5000 Versal development card features the Xilinx® Versal adaptive compute acceleration platform (ACAP) XCVC1902 device, manufactured on TSMC’s 7nm technology. The device has 400 AI engines (AIE) running at 1.25 GHz, combined with 1,968 DSP engines in programmable logic (PL), providing up to 145 TOPS (INT8) for AI inference. It also has a large amount of on-chip memory that can be used to store feature maps or intermediate data to further increase the efficiency of AI inference.

In recent MLPerf™ Inference v1.0 results released by MLCommons™, the VCK5000 achieved 5,921 fps for ResNet-50 in the server scenario and 6,257 fps in the offline scenario in the data center closed division. That’s 9% higher performance in the same benchmark than Nvidia’s T4 GPU card, which is widely used for AI inference in data centers and on premises.

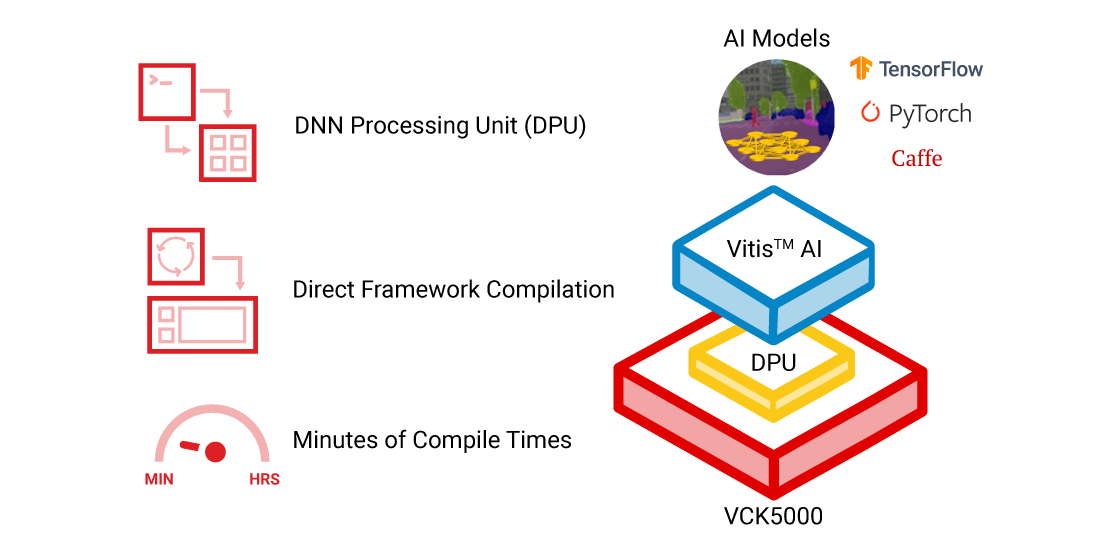

The VCK5000 is fully supported by the Vitis™ AI 1.3.1 release with two DPU variants featuring 384 and 288 AIE cores, respectively. It can run more than 50 AI models from the Vitis AI Model Zoo for different classification, detection, and segmentation tasks, including ResNet, YOLOv3, SSD, U-Net, OpenPose, SalsaNext, and others.

Two demos, Natural Language Processing with BERT and the MLPerf 1.0 submission ResNet-50, have been built for the VCK5000. You can run these quickly to see performance results. Demo packages are available upon request.

The AI performance of the VCK5000 scales with the number of AIE cores used by the DPU. Depending on the performance needs of your AI application, it can be deployed in the cloud or on premises through the same software stack.
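As a rough illustration of scaling with AIE core count, the sketch below estimates throughput for one DPU variant from a measurement on another. It assumes strictly linear scaling, which is a simplification and not a published characteristic of the card; the function name `scaled_throughput` and the 1,000 fps baseline are hypothetical, chosen only for the example. Only the 384 and 288 core counts come from the DPU variants mentioned above.

```python
def scaled_throughput(baseline_fps: float, baseline_cores: int, target_cores: int) -> float:
    """Estimate throughput at target_cores from a baseline measurement.

    ASSUMPTION: throughput scales linearly with the number of AIE cores
    used by the DPU. Real results depend on the model, memory bandwidth,
    and DPU configuration, so treat this as a first-order estimate only.
    """
    if baseline_cores <= 0 or target_cores <= 0:
        raise ValueError("core counts must be positive")
    return baseline_fps * target_cores / baseline_cores


# Hypothetical baseline: 1,000 fps measured on the 384-core DPU variant.
# The 1,000 fps figure is a placeholder, not a benchmark result.
estimate = scaled_throughput(1000.0, baseline_cores=384, target_cores=288)
print(f"Estimated throughput on the 288-core variant: {estimate:.0f} fps")
```

Replacing the placeholder baseline with a throughput you measure yourself gives a quick starting point for choosing between the two DPU variants before benchmarking both.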

Next steps

Head over to the VCK5000 product page and fill out a purchase request to qualify for this limited-time pricing. This offer is only for customers who are developing AI inference workloads with pre-built Vitis AI accelerators and who will not be customizing hardware using Vitis or Vivado. Once your request is received, a sales representative will contact you with ordering instructions. For further questions, please contact vck5000-aie_sponsor@xilinx.com.

For more information about Vitis AI and DPU, please visit https://github.com/Xilinx/Vitis-AI.