Implementing AI in an Embedded Project

Motivation

Field-programmable gate arrays (FPGAs) constitute a flexible hardware structure and often form an essential part in many different aspects of embedded systems, ranging from digital signal processing tasks up to high-performance I/Os and reliable safety-critical components. Due to their powerful parallel computing capabilities, they are also an obvious choice when it comes to the implementation of image processing applications.

Nowadays, many image analysis tasks such as object detection or image segmentation are realized through neural network approaches, which often clearly outperform well-known classical image processing algorithms in terms of accuracy. However, as a drawback, convolutional neural networks (CNNs) often have an extraordinary demand for computing power, arithmetic operations, and data storage.

Since resources on FPGAs are restricted, an optimized architecture and network design is highly necessary when implementing neural networks on FPGAs. For that, improvements are basically achieved by problem-driven and target-driven strategies. Problem-driven optimization covers aspects such as model selection or model refinement, while target-driven optimization aims at minimizing the inference time on the target platform.

This paper summarizes the efforts made by Solectrix to implement an artificial intelligence (AI) approach on an embedded platform. Our work is encapsulated within the AI Ecosystem, which allows all required steps to be specified and executed, from model generation, training, and model pruning up to the final model deployment. To make the network inference work on Xilinx FPGAs, an intermediate format has to be generated that can be interpreted and executed by the Xilinx Deep Learning Processor Unit (DPU), a configurable IP accelerator dedicated to convolutional neural networks.

Experimental results show the functionality of the discussed AI Ecosystem and the impact of optimization techniques such as careful model shaping and model pruning.

Network Compression

As mentioned above, neural networks have revolutionized many areas within the field of computer vision and machine intelligence, including segmentation, classification, and detection tasks. Tuning neural networks for the best possible accuracy makes modern CNNs typically deep, complex, and computationally expensive. Consequently, deploying state-of-the-art CNNs on resource-constrained platforms, such as mobile phones or embedded devices, is a challenging task.

In literature, different approaches for compressing neural network structures and accelerating them on the final target device are discussed intensively.

While quantization limits the required bit depth, pruning methods try to identify and remove unimportant weights or filters [1]. In addition, mathematical concepts such as the Winograd transform [2] or low-rank matrix factorization can be used for further acceleration.

However, besides the above-mentioned methods, choosing a proper network structure plays a major role in efficient and real-time capable network design.

The following two sections give a brief overview of the network type and the pruning method that were used for the results section.

Network Selection

This section gives a short description of our selected network structure. In general, the final network selection should always be project-specific and typically consider both the application and the chosen target platform. For a more detailed discussion and an overview of different network types, the reader is referred to the cited literature [3-11].

For our application, which is discussed in the results section, we chose a MobileNetV2 structure as a basic network type.

MobileNets, first published in 2017 [7], aim at making neural networks applicable on mobile or embedded target devices. The architecture is based on depthwise separable convolutions in order to build lightweight deep neural networks. The idea of depthwise separable convolution is shown in the center and bottom images in Figure 1, while the standard convolution is depicted in the top image. As can be seen, a depthwise separable convolution consists of a depthwise convolution and a pointwise convolution. For a given feature map of size 𝐷𝐹×𝐷𝐹, a kernel size of 𝐷𝐾×𝐷𝐾, 𝑀 input channels, and 𝑁 output channels, the conventional convolution has a computational cost of 𝐷𝐹·𝐷𝐹·𝐷𝐾·𝐷𝐾·𝑀·𝑁. In contrast, the depthwise convolution has a cost of 𝐷𝐹·𝐷𝐹·𝐷𝐾·𝐷𝐾·𝑀, and together with the pointwise convolution, the total cost of a depthwise separable convolution can be written as 𝐷𝐹·𝐷𝐹·𝐷𝐾·𝐷𝐾·𝑀 + 𝐷𝐹·𝐷𝐹·𝑀·𝑁. As stated in [7], MobileNets use 3x3 depthwise separable convolutions, which require 8 to 9 times fewer computations than standard convolutions at only a small reduction in accuracy.
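The cost formulas above can be checked with a few lines of Python. This is a minimal sketch; the layer dimensions (64x64 feature map, 128 input and 256 output channels) are hypothetical values chosen for illustration and are not taken from our model.

```python
def standard_conv_cost(d_f, d_k, m, n):
    """Multiply-accumulate count of a standard convolution."""
    return d_f * d_f * d_k * d_k * m * n


def depthwise_separable_cost(d_f, d_k, m, n):
    """Cost of a depthwise convolution followed by a 1x1 pointwise convolution."""
    depthwise = d_f * d_f * d_k * d_k * m
    pointwise = d_f * d_f * m * n
    return depthwise + pointwise


# Hypothetical layer: 64x64 feature map, 3x3 kernel, 128 -> 256 channels.
d_f, d_k, m, n = 64, 3, 128, 256
std = standard_conv_cost(d_f, d_k, m, n)
sep = depthwise_separable_cost(d_f, d_k, m, n)
ratio = std / sep
print(f"standard: {std}, separable: {sep}, saving factor: {ratio:.2f}")
```

Dividing the two expressions shows that the relative cost is 1/𝑁 + 1/(𝐷𝐾·𝐷𝐾), so for 3x3 kernels and a large number of output channels the saving approaches a factor of 9, which matches the 8 to 9 times quoted from [7].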

In addition, MobileNetV2, published in 2019 [8], is based on an inverted residual structure and further decreases the number of required operations significantly while retaining the accuracy. The paper proposes a novel layer type, called inverted residual with linear bottleneck. As input, the module takes a low-dimensional compressed representation, which is first expanded to a higher dimension and filtered with a lightweight depthwise convolution. Then, using a linear convolution, the obtained features are projected back onto a low-dimensional representation.
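The expand, filter, and project sequence of such a block can be sketched in plain NumPy. This is only an illustrative forward pass under simplifying assumptions: stride 1, zero padding, no batch normalization, ReLU6 as the activation, and random weights. The function names and the expansion factor of 6 are choices made for this sketch, not details of our implementation.

```python
import numpy as np


def relu6(x):
    return np.clip(x, 0.0, 6.0)


def pointwise_conv(x, w):
    """1x1 convolution. x: (H, W, C_in), w: (C_in, C_out)."""
    return x @ w


def depthwise_conv3x3(x, w):
    """3x3 depthwise convolution, stride 1, zero padding. w: (3, 3, C)."""
    h, wd, _ = x.shape
    padded = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros_like(x)
    for dy in range(3):
        for dx in range(3):
            # Each channel is filtered with its own 3x3 kernel.
            out += padded[dy:dy + h, dx:dx + wd, :] * w[dy, dx, :]
    return out


def inverted_residual(x, w_expand, w_depthwise, w_project):
    y = relu6(pointwise_conv(x, w_expand))        # expand to a higher dimension
    y = relu6(depthwise_conv3x3(y, w_depthwise))  # lightweight depthwise filtering
    y = pointwise_conv(y, w_project)              # linear bottleneck: no activation
    if y.shape == x.shape:                        # residual connection if shapes match
        y = y + x
    return y


rng = np.random.default_rng(0)
c_in, expansion = 16, 6
x = rng.standard_normal((8, 8, c_in))
w_expand = 0.1 * rng.standard_normal((c_in, c_in * expansion))
w_depthwise = 0.1 * rng.standard_normal((3, 3, c_in * expansion))
w_project = 0.1 * rng.standard_normal((c_in * expansion, c_in))
out = inverted_residual(x, w_expand, w_depthwise, w_project)
print(out.shape)
```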

Network Pruning

This section gives a brief overview on the chosen pruning approach [12]. As already mentioned, network pruning is a possibility to further compress neural networks. Typically, this is done by searching, finding, and deleting unimportant weights or complete feature channels. Afterwards, the pruned model is re-trained for several epochs in order to compensate for the accuracy drop caused by the pruning step.

In weight pruning, individual weights are deleted or set to zero. As a simple criterion, the smallest absolute weights could be chosen. However, many other approaches have already been proposed in the literature. In contrast, in channel pruning, complete channels are deleted. Again, a simple criterion for determining which channels should be removed could be the minimal sum of absolute channel weights.
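Both simple criteria can be sketched in NumPy as follows. This is an illustration of the two selection rules only, assuming a Keras-style kernel layout of (height, width, input channels, output channels). The function names are hypothetical, and no re-training is performed.

```python
import numpy as np


def prune_weights_by_magnitude(weights, fraction):
    """Weight pruning: zero out the given fraction of smallest-magnitude weights."""
    k = int(round(fraction * weights.size))
    if k == 0:
        return weights.copy()
    threshold = np.sort(np.abs(weights), axis=None)[k - 1]
    pruned = weights.copy()
    pruned[np.abs(pruned) <= threshold] = 0.0
    return pruned


def channels_to_keep(weights, num_remove):
    """Channel pruning: drop the output channels with the smallest L1 norm."""
    l1_per_channel = np.abs(weights).sum(axis=(0, 1, 2))
    removed = np.argsort(l1_per_channel)[:num_remove]
    return np.setdiff1d(np.arange(weights.shape[-1]), removed)


rng = np.random.default_rng(0)
w = rng.standard_normal((3, 3, 8, 16))       # hypothetical 3x3 kernel, 8 -> 16 channels

w_sparse = prune_weights_by_magnitude(w, 0.5)  # same shape, half of the entries zero
keep = channels_to_keep(w, 4)
w_thin = w[..., keep]                          # smaller dense tensor with 12 channels
print(w_sparse.shape, w_thin.shape)
```

Weight pruning leaves the tensor shape untouched and only introduces zeros, whereas channel pruning yields a smaller dense tensor. In a real network, the input channels of the following layer would have to be reduced accordingly.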

In literature, different examples can be found for weight pruning, channel pruning, hard pruning, or soft pruning [12-16].

Figure 2 shows a model prior to (left) and after pruning (right). As can be seen, the center element has been removed. As a consequence, all input and output connections of the deleted element also have to be removed. Thus, channel pruning within a specific layer can also influence subsequent layers.

Figure 3 compares different pruning strategies and the resulting sparsity. While the sparsity is very irregular in the case of weight pruning (left), it is much more regular and organized in the case of filter pruning (right). As a consequence, although the number of weights is reduced, pruning individual weights often results in even slower inference models. This is caused by the fact that the target hardware is typically not optimized for irregularly sparse structures. However, the drop in accuracy is comparatively small, since weight pruning allows for a very accurate pruning selection. In contrast, the drop in accuracy is typically higher in the case of filter pruning. Thus, the re-training step is particularly important when pruning entire filters.

In [12], the method we selected for the experimental results discussed later, the average percentage of zero activations (APoZ) is proposed as the pruning criterion. According to [12], APoZ measures the percentage of zero activations of a neuron after the ReLU mapping, that is, after the activation layer. Let 𝑂𝑐(𝑖) denote the output of the c-th channel in the i-th layer. Its APoZ value is written as

$$\mathrm{APoZ}_c^{(i)} = \mathrm{APoZ}\left(O_c^{(i)}\right) = \frac{\sum_{k=1}^{N} \sum_{j=1}^{M} f\left(O_{c,j}^{(i)}(k) = 0\right)}{N \cdot M}$$

with 𝑓(∙)=0 if false and 𝑓(∙)=1 if true. Further, 𝑀 denotes the dimension of the output feature map and 𝑁 denotes the number of test samples used to measure the activations.

Thus, as can be seen, the APoZ criterion simply counts how often a neuron or a filter is zero after activation. Since the value gets normalized by the output size and the number of samples, a simple threshold between 0 and 1 can be used in order to control the pruning process. Note that choosing a higher threshold leads to fewer weights or filters being removed.
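As a minimal sketch, the APoZ value per channel and the threshold-based selection can be computed with NumPy as shown below. The activation layout (samples, height, width, channels), the function names, and the rule of keeping filters whose APoZ does not exceed the threshold are assumptions made for this illustration. The toy activations are constructed by hand so that the result is easy to verify.

```python
import numpy as np


def apoz(activations):
    """APoZ per channel for post-ReLU activations of shape (N, H, W, C)."""
    is_zero = (activations == 0)
    # Averaging over samples and spatial positions normalizes by N * M.
    return is_zero.mean(axis=(0, 1, 2))


def filters_to_keep(apoz_values, threshold):
    """Indices of filters whose APoZ does not exceed the threshold."""
    return np.flatnonzero(apoz_values <= threshold)


# Toy activations: 4 samples, 2x2 feature map, 3 channels.
acts = np.ones((4, 2, 2, 3))
acts[..., 0] = 0.0        # channel 0 is never active
acts[:2, :, :, 2] = 0.0   # channel 2 is inactive for half of the samples

values = apoz(acts)
print(values)                          # [1.  0.  0.5]
print(filters_to_keep(values, 0.9))    # [1 2]
print(filters_to_keep(values, 0.25))   # [1]
```

The two calls at the end illustrate the remark above: the higher threshold of 0.9 keeps two filters, while the lower threshold of 0.25 keeps only one.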

Please note that choosing a specific pruning method should always depend on the final target platform or on given project requirements. Thus, having the full control over the network pruning process is an essential part of optimizing AI applications for embedded systems.

AI Ecosystem

This section discusses our AI Ecosystem, providing a complete framework covering all steps, ranging from model generation, training, and network pruning to model deployment. In the next section, the final transfer to the desired target platform is exemplarily shown for a Xilinx MPSoC.

While there are many potential deep learning frameworks available, we decided to start with TensorFlow/Keras, which is likely the most future-proof choice. The Ecosystem wraps around the actual framework and allows functional expansion with custom layers, models, re-usable functions, and tools. It grows with new use cases and applications while retaining a standard workflow for model generation, dataset compilation, training, optional pruning, and final model deployment.

Figure 4 shows a basic concept of the workflow with our AI Ecosystem which will be discussed in more detail in the following subsections.

Model Generator

For model generation, a model can be selected from a database. This typically includes well-known models proposed in literature. Alternatively, a fully customized model can be defined. For adapting the model to the actual target application, custom layers from the ecosystem’s layers namespace may be used, including layers needed for pruning, as required, for example, by the AutoPruner method [16]. For configuration, the Google intermediate format protobuf is used. Using the Python script create_model.py, a model file is created that includes both the network topology and its parameterization. Further side information is stored in a model configuration file. Depending on the model generation, the resulting h5 file might already include pre-trained weights.

The model generation is illustrated in Figure 5.

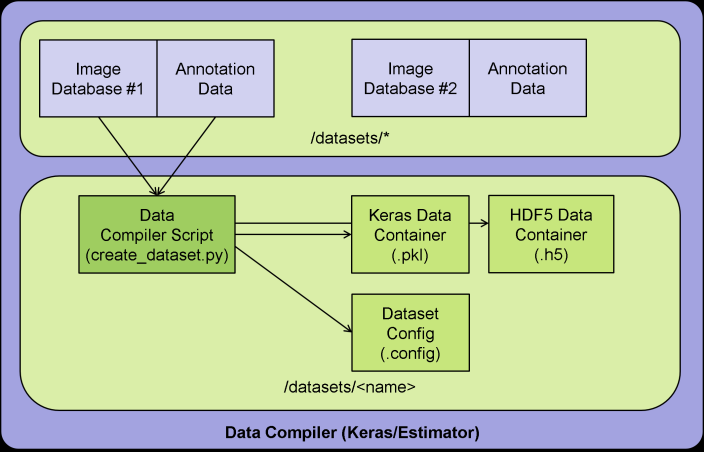

Dataset Compiler

Besides the general network structure, as discussed in the previous subsection, the datasets to be used can also be configured. For that, the desired database with corresponding annotation data can be selected. Datasets might be designed individually, using one's own image data, or they can be combined with publicly available data. Within the Python script create_dataset.py, the datasets can be sub-divided into training and validation sets. Finally, the creation again results in a configuration file, describing important side information, and a data container in either pkl or h5 format. While the pkl format only provides image paths, the h5 format directly includes the image data. Thus, the container formats clearly differ in size, and the format can be chosen depending on the individual training environment.

The dataset configuration is illustrated in Figure 6.

Training

To initialize training, a session is created by the script create_session.py, which includes the model and the dataset. A session contains training-specific settings that condition model training, such as learning rate scheduler parameters, batch size, number of epochs, and so forth.

The training itself is controlled by the Python script train.py. Various Keras callbacks might be activated, for example for adapting the learning rate, computing the mean average precision as accuracy metric, or writing different TensorBoard log files. The training process stores model checkpoints after each epoch, allowing for a later continuation of the training process.

The model training is illustrated in Figure 7.

Workflow for Xilinx ZCU102 Evaluation Kit

After discussing the main idea behind the Solectrix-internal AI Ecosystem, this section covers the basic workflow for integrating the AI Ecosystem and implementing the network inference on an edge device. As target hardware for a model example, the Xilinx ZCU102 evaluation kit has been chosen, as it carries a powerful Xilinx MPSoC device capable of hosting even multiple DPU cores. The workflow, illustrated in Figure 8, is outlined in the following.

As already described in the previous section, network training requires the basic model structure together with proper training data. Within the AI Ecosystem, the trained network is represented as an hdf5 (.h5) file. After training and optional pruning, the network is deployed, meaning that all elements which are only required for training but not for the later network inference are removed. The deployment results in a Tensorflow frozen graph model. Next, the frozen graph is fed into the Vitis AI (VAI) Tensorflow Translation Tools. Through an inspection command vai_q_tensorflow inspect, the network’s topology can be verified, ensuring that all operators are supported by the Xilinx DPU core. Then, the network is quantized for an 8-bit representation. Quantization requires another dataset, which is used by the VAI quantizer tool vai_q_tensorflow quantize to optimize the quantization parameters.
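To give an intuition for why the quantization step needs sample data, the following sketch shows a generic symmetric 8-bit quantization in NumPy, where the scale is derived from calibration samples. It is explicitly not the algorithm used by the VAI quantizer; the function names and the max-abs calibration rule are assumptions made purely for illustration.

```python
import numpy as np


def calibrate_scale(samples):
    """Derive a scale so that the largest observed magnitude maps to 127."""
    max_abs = float(np.max(np.abs(samples)))
    return max_abs / 127.0 if max_abs > 0 else 1.0


def quantize_int8(x, scale):
    """Map floating-point values to signed 8-bit integers."""
    return np.clip(np.round(x / scale), -128, 127).astype(np.int8)


def dequantize(q, scale):
    """Map 8-bit integers back to approximate floating-point values."""
    return q.astype(np.float32) * scale


rng = np.random.default_rng(0)
calibration_data = rng.standard_normal(1000)   # stand-in for real activations
scale = calibrate_scale(calibration_data)

q = quantize_int8(calibration_data, scale)
error = np.max(np.abs(calibration_data - dequantize(q, scale)))
print(f"scale: {scale:.5f}, max abs error: {error:.5f}")
```

For values inside the calibrated range, the rounding error stays below half a quantization step, which is why a representative calibration dataset matters.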

The Deep Learning Processor Unit (DPU) IP core is configured and implemented into the FPGA image using Xilinx Vivado or Vitis – depending on the chosen workflow. Then, the hardware handoff file (hwh), generated by Vivado/Vitis is fed into VAI. The tool dlet is used to extract the DPU configuration parameters from the hwh file. Finally, for compilation, the Vitis AI Compiler vai_c_tensorflow is used in order to translate the Tensorflow network format into a binary blob file that can be executed by the DPU.

After compiling the network structure, the desired application can be implemented within Vitis and the compiled network is integrated into the application.

Using Petalinux as the operating system and the host compiler environment tools generated by its sdk.sh, the application is finally cross-compiled for the target hardware. Please note that details on the exact Petalinux configuration are not within the scope of this article.

Experimental Results

This section shows some experimental results and confirms the basic principle of the workflow discussed above.

Model training

As a working example, we decided to create a fully customized MobileNetV2 SSD (single shot multibox detector) [17] that recognizes our Solectrix company logo regardless of its orientation, scale, and background. For illustration, an example image is shown in Figure 9. The model uses an unusually large 1024x1024x3 pixel input image to allow for high resolution detection on 6 resolution scales. We included 12 MobileNetV2 blocks with a maximum depth of 512 filters per layer. The inference model includes the whole feature extractor backbone and convolutional layers used by the SSD detectors. The SSD box results are later decoded in the embedded ARM CPU.

The used dataset consists of 503 images. Across the images, the number of detectable logos varied from 1 to 40. For evaluation, the dataset was split into training (~95 %) and validation data (~5 %). Obviously, the number of images is much too small for a real project-driven application. However, the dataset is suitable enough to serve as an illustrative workflow example.
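A split of this kind can be sketched with the Python standard library. The function name, the fixed seed, and treating the 5 % validation share as an exact fraction are assumptions for this illustration; the actual split inside create_dataset.py may differ.

```python
import random


def split_dataset(items, validation_fraction, seed=0):
    """Shuffle the items reproducibly and split off a validation subset."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_val = round(len(items) * validation_fraction)
    return items[n_val:], items[:n_val]


image_ids = list(range(503))               # stand-in for the 503 image entries
train_ids, val_ids = split_dataset(image_ids, 0.05)
print(len(train_ids), len(val_ids))        # 478 25
```

With only about 25 validation images, a single misdetection already changes the validation metric noticeably.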

Initially, the designed model consists of 880124 parameters.

For data augmentation, the following operations were used: photometric distortion, random crop, random scale, random flip, and random box expand.

The model was trained for 120 epochs, with 1000 iterations per epoch. During training, the learning rate was adjusted within the range of 0.001 to 0.03. Both the training and the validation loss came close to a converged state, and the average precision ended up at 0.9 for the training dataset and 0.98 for the validation dataset.

Model Pruning

After the model was trained, it is technically ready for deployment. The optional pruning step was carried out to show the impact of parameter reduction in comparison to the detection performance. As pruning method, the above-mentioned APoZ criterion [12] was selected.

The basic strategy is to adjust the APoZ threshold so that the most inactive filters of the convolutional layers are removed. As an activity profile of the entire network must be worked out over the full training dataset, it is beneficial to prune over small groups of Conv2D layers in tiny delta cycles. After each cycle, a fine-tuning step is required to allow the model to compensate for the removed filters by tuning the remaining ones. This normally takes only a few training iterations, as the impact on the model after each cycle is relatively small. After each iteration, the model is validated using the average precision metric. This allows the pruning progress to be supervised and supports the decision as to which pruning stage should be chosen for deployment.
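The control flow of such an iterative scheme can be sketched as a small loop. All names here are hypothetical, and the pruning, fine-tuning, and evaluation steps are passed in as callables. The toy stand-ins at the bottom only mimic shrinking filter counts so that the sketch runs without a deep learning framework; they do not reflect the behavior of our tool.

```python
def iterative_pruning(model, thresholds, layer_groups,
                      prune_group, fine_tune, evaluate):
    """Prune group by group for each threshold and record a checkpoint per cycle."""
    checkpoints = []
    for threshold in thresholds:        # e.g. from 0.9 down to 0.25
        for group in layer_groups:      # small groups of Conv2D layers
            model = prune_group(model, group, threshold)
            model = fine_tune(model)    # short re-training to compensate
            checkpoints.append((threshold, group, evaluate(model)))
    return model, checkpoints


# Toy stand-ins (purely illustrative): the "model" is a dict of filter counts.
def toy_prune(model, group, threshold):
    pruned = dict(model)
    pruned[group] = max(4, int(model[group] * (0.5 + threshold / 2)))
    return pruned


toy_model = {"group_a": 512, "group_b": 256}
final_model, checkpoints = iterative_pruning(
    toy_model, [0.9, 0.5, 0.25], ["group_a", "group_b"],
    toy_prune, fine_tune=lambda m: m, evaluate=lambda m: sum(m.values()))

for threshold, group, total_filters in checkpoints:
    print(threshold, group, total_filters)
```

Storing a checkpoint after every cycle is what makes it possible to pick a pruning stage for deployment afterwards.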

In our example, the APoZ threshold was varied from 0.9 down to 0.25 in multiple iterations. Relevant Conv2D layers were identified and split up into 9 layer groups. Further, the pruning tool constrained the number of remaining filters to multiples of 4, taking the inference target architecture into account in order to achieve high usage of the parallel DPU multiplier resources. This is just one way of including the target inference platform in the optimization strategy.
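The multiple-of-4 constraint can be expressed in a few lines. Whether the tool rounds up or down is not stated above; this sketch assumes rounding up so that no filter selected as active is lost, and the function names are hypothetical.

```python
def round_up_to_multiple(count, multiple=4):
    """Smallest multiple of `multiple` that is greater than or equal to count."""
    return ((count + multiple - 1) // multiple) * multiple


def filters_after_pruning(num_active, num_original, multiple=4):
    """Filter count to keep: at least one block, never more than the original."""
    rounded = round_up_to_multiple(max(num_active, 1), multiple)
    return min(rounded, num_original)


print(filters_after_pruning(221, 512))   # 224
print(filters_after_pruning(224, 512))   # 224
print(filters_after_pruning(0, 512))     # 4
```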

Figure 10 shows the reduction of parameters over the pruning progress in comparison to the model’s average precision. As the validation dataset is very small in our case, it does not reflect the overall performance of the desired application very well. Therefore, we also used the training dataset to measure model accuracy for comparison. The progress shows plausible behavior: the more convolutional filters were removed, the smaller the model became. Every dot in the plot represents a saved pruned model.

For final deployment, we chose a model that was shrunk to 404052 parameters, which is about 45.9 % of the original model, while the average precision is close to the original one (train set: 0.898, validation set: 0.883). Going further would lead to a massive and unacceptable accuracy drop.

The whole iterative pruning process only took about the same amount of time as the model training itself. It is therefore an essential optimization tool.

Figure 11 shows the distribution of the APoZ values (activity profile) of selected Conv2D layers of the trained and the pruned model. The APoZ value is given on the y-axis, while the number of filters is given on the x-axis. As described by the figure legend, different Conv2D layers are shown in different colors. As already mentioned above, the number of filters per layer was limited to 512, corresponding to the maximum value on the x-axis. The values per layer were sorted in decreasing order. While an APoZ value of 0 corresponds to 100 % activation, values near 1 indicate filters that are rarely activated and therefore not relevant for the final output of the model.

Comparing both models, two effects can be observed. First, by pruning, the number of filters is reduced to some degree, which is the actual purpose of pruning. The maximum number of filters per layer is 224 after pruning, compared to the previously chosen 512 filters for some layers. Second, the maximum APoZ values are also decreased, which shows that the general degree of overall activity increased.

A second comparison between the trained and the pruned model is given in Figure 12. It shows an inference example on the ZCU102 evaluation board for the trained and the pruned network, respectively. As can be seen, both networks are able to convincingly detect the four Solectrix company logos of the example image from our website at different scales and angles within the test image. All detections are marked by their corresponding bounding boxes. In addition, the network confidence is given. It can be seen that the confidence and the location of the detection boxes changed to some degree. However, despite the huge reduction of the model size, the pruned network still detects all four Solectrix logos.

Finally, in a last experiment, the load of the utilized DPU core was evaluated. For that, two different configurations were used. First, a configuration with 512 operations per clock cycle, and second, a configuration with a maximum of 4096 operations per clock cycle. In the following, the two configurations are referred to as B512 and B4096, respectively. Now, with a DPU clock of 325 MHz, the maximum performance is 166.4 GOP/sec and 1331.2 GOP/sec for B512 and B4096, respectively.
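The peak figures follow directly from the number of operations per cycle multiplied by the clock frequency, as the short calculation below shows. The function name is chosen for this sketch only.

```python
def peak_gops(ops_per_cycle, clock_mhz):
    """Theoretical peak performance in GOP/sec."""
    return ops_per_cycle * clock_mhz / 1000.0


for name, ops in (("B512", 512), ("B4096", 4096)):
    print(f"{name}: {peak_gops(ops, 325):.1f} GOP/sec")
```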

Next, the inference time was measured using the DPU core profile mode. Figure 13 compares the performance and the runtime for the two considered DPU configurations. While the performance of B512 is shown in green, the performance of B4096 is shown in blue. As can be seen, the total inference time for B512 is about 68 ms. In contrast, the inference time for B4096 is roughly 15 ms. Thus, the runtime difference factor between the two configurations is about 4.5, which clearly differs from the theoretically optimal factor of 8.0. In addition, it can be observed that the performance is not constant but varies across the different network layers.

Figure 14 finally compares the performance of the trained and the pruned model for the B4096 DPU configuration. While the trained model, again, is shown in blue, the pruned model is shown in orange. In the figure, two interesting facts can be observed. First, the inference time of the pruned model is about 5.5 ms shorter than that of the unpruned, purely trained model. This corresponds to a reduction in time of about 38.4 %, which clearly differs from the 54.1 % reduction of parameters observed for the pruned model. Thus, a reduction in the number of parameters does not automatically lead to the same reduction in inference time on the target hardware. Second, for some layers, and this especially holds for early network layers, the pruning leads to a clear reduction in parameters but no reduction in inference time. Thus, it might be beneficial to exclude those layers from the pruning in order to profit from the maximum filter depth, as long as the number of parameters is not the main optimization criterion.
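The ratios discussed in this section can be recomputed from the reported numbers. The sketch below uses only the rounded values given in the text (parameter counts, 68 ms and roughly 15 ms), so its results are approximate; the variable names are chosen for illustration.

```python
params_trained = 880124
params_pruned = 404052
param_reduction = 1.0 - params_pruned / params_trained

t_b512_ms, t_b4096_ms = 68.0, 15.0      # rounded inference times from the text
measured_speedup = t_b512_ms / t_b4096_ms
ideal_speedup = 4096 / 512

print(f"parameter reduction: {param_reduction:.1%}")
print(f"measured B512/B4096 speedup: {measured_speedup:.2f} (ideal {ideal_speedup:.1f})")
```

The parameter reduction of about 54.1 % is clearly larger than the reported 38.4 % reduction in inference time, and the measured speedup of roughly 4.5 stays well below the ideal factor of 8.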

As a consequence, it might be beneficial to profile the inference time of differently designed networks prior to model training. By doing so, a model can be found that achieves approximately optimal performance on the configured DPU core structure.

Summary & Outlook

This work summarizes the efforts made by Solectrix aiming at implementing an artificial intelligence (AI) approach on an embedded platform. After explaining some basic network models and pruning methods, the Solectrix AI Ecosystem, the company-internal tool, was discussed, and the workflow for using a trained network on a ZCU102 evaluation board was briefly described. Finally, experimental results emphasized the impact of the DPU configuration and the pruning on the resulting inference time on the target hardware.

As seen in Figure 14, the pruning efforts in the first interval, which shows the actual DPU performance on the first Conv2D layer, did not reduce inference time at all. This may be caused by technical constraints, such as bandwidth limitations or parallelization properties of the Xilinx DPU core. For a model optimization focusing on improved latency, the reduction of depth was therefore not beneficial here. The layer depth could possibly even be increased without any impact on inference time. Other layers could instead be driven to an increase in DPU usage after pruning, which would lead to better utilization of DPU computing resources and therefore to a shorter processing time.

Solectrix is currently investigating systematically how CNN operations should be parameterized to maximize DPU usage. This target-driven optimization strategy would incorporate knowledge of the Xilinx DPU properties, or those of any other embedded target platform, to exploit the maximum available computing power. Our pruning strategy would then refine a model toward the optimal “sweet spot” of the underlying architecture.

Based on our results, we are convinced that pruning should be a mandatory step during embedded AI application development.

For more information, please visit www.solectrix.de

References

[1] S. Han, H. Mao, W. Dally, “Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding”, 2016

[2] L. Meng, J. Brothers, “Efficient Winograd Convolution via Integer Arithmetic”, 2019

[3] K. Simonyan, A. Zisserman, “Very Deep Convolutional Networks for Large-Scale Image Recognition”, 2015

[4] K. He, X. Zhang, S. Ren, J. Sun, “Deep Residual Learning for Image Recognition”, 2015

[5] F. N. Iandola, S. Han, M. Moskewicz, K. Ashraf, W. J. Dally, K. Keutzer, “SqueezeNet: AlexNet-Level Accuracy with 50x Fewer Parameters and <0.5MB Model Size”, 2017

[6] X. Zhang, X. Zhou, M. Lin, J. Sun, “ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices”, 2017

[7] A. G. Howard, M. Zhu, B. Chen, D. Kalenichenko, W. Wang, T. Weyand, M. Andreetto, H. Adam, “MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications”, 2017

[8] M. Sandler, A. Howard, M. Zhu, A. Zhmoginov, L.-C. Chen, “MobileNetV2: Inverted Residuals and Linear Bottlenecks”, 2019

[9] M. Tan, B. Chen, R. Pang, V. Vasudevan, M. Sandler, A. Howard, Q. V. Le, “MnasNet: Platform-Aware Neural Architecture Search for Mobile”, 2019

[10] A. Howard, M. Sandler, G. Chu, L.-C. Chen, B. Chen, M. Tan, W. Wang, Y. Zhu, R. Pang, V. Vasudevan, Q. V. Le, H. Adam, “Searching for MobileNetV3”, 2019

[11] T. Yang, A. Howard, B. Chen, X. Zhang, A. Go, M. Sandler, V. Sze, H. Adam, “Netadapt: Platform-aware neural network adaptation for mobile applications”, 2018

[12] H. Hu, R. Peng, Y.W. Tai, C.K. Tang, “Network Trimming: A Data-Driven Neuron Pruning Approach towards Efficient Deep Architectures”, 2016

[13] H. Mao, S. Han, J. Pool, W. Li, X. Liu, Y. Wang, W. Dally, “Exploring the regularity of sparse structure in convolutional neural networks”, 2017

[14] J.H. Luo, H. Zhang, H.Y. Zhuo, C.W. Xie, J. Wu, W. Lin, “ThiNet: Pruning CNN Filters for a thinner net”, 2018

[15] Y. He, G. Kang, X. Dong, Y. Fu, Y. Yang, “Soft Filter Pruning for Accelerating Deep Convolutional Neural Networks”, 2018

[16] J.H. Luo, J. Wu, “AutoPruner: An End-to-End Trainable Filter Pruning Method for Efficient Deep Model Inference”, 2019

[17] W. Liu, D. Anguelov, D. Erhan, C. Szegedy, S. Reed, C.-Y. Fu, A. Berg, “SSD: Single Shot MultiBox Detector”, 2016

About Thomas Richter

Thomas Richter received a master's degree in information and communication technology and a PhD from Friedrich-Alexander-University Erlangen-Nürnberg (FAU), Erlangen, Germany, in 2011 and 2016, respectively. Since 2018, he has worked as a senior algorithm engineer at Solectrix in the area of image and video signal processing. Currently, his special focus is on machine learning applications as well as on designing classical object detection and tracking methods in the automotive area.

About André Köhler

André Köhler is an imaging expert at Solectrix who works in various research and development areas such as image sensor calibration, algorithm design, IP implementation, systems integration, prototyping, workflow automation, and evaluating new methods and technologies to bring new camera solutions to life. He has 17 years of professional experience in the design of embedded cameras on various FPGA-based platforms. He has a master's degree in microsystems technology and lives in Nuremberg, Germany.