Introductory Tutorial to the Vitis AI Profiler

Current Status:

- Tested with Vitis AI 1.3, Profiler 1.3.0, Vivado 2020.2

- Tested on the following platforms: ZCU102, ZCU104

Introduction:

This tutorial introduces the Vitis AI Profiler tool flow and illustrates how to profile an example from the Vitis AI Runtime (VART). The example runs on the ZCU104, a Zynq UltraScale+ MPSoC demonstration platform.

The Vitis AI Profiler is an application-level tool that helps detect performance bottlenecks across the whole AI application. This includes the pre-processing and post-processing functions together with the Vitis AI DPU kernel while running a neural network. The user sees the complete AI data pipeline displayed on a common time scale and can determine where improvements can be made. Read and write accesses to DDR memory are also captured to give a system-wide perspective.

Low-performing functions can be evaluated and considered for execution on different accelerators such as the AIE block or programmable logic to reduce the CPU's workload. The Profiler version 1.3.0 supports both Alveo HBM Accelerator cards (DPUCAHX8) and Zynq UltraScale+ MPSoC (DPUCZDX8).

We will follow these steps to demonstrate the Vitis AI Profiler:

- Set up the target hardware and host computer

- Configure the Linux operating system for profiling using PetaLinux (if needed)

- Execute a simple trace using the VART resnet50 example

- Execute a fine-grain trace using the VART resnet50 example

- Import all captured results into Vitis Analyzer for observations

- Conclusions

Setup and Requirements:

- Host Computer Setup for Vivado

- To review the Profiler results you will require Vivado 2020.2 to run Vitis_Analyzer - Found Here

- Download the Xilinx Unified Installer for the Windows OS and run.

- Provide your Xilinx.com login details and install Vivado

- Note: The Profiler ONLY requires the Vivado Lab Edition. This will greatly reduce the amount of disk space required.

- Target Setup: LINK1 LINK2

- The two hyperlinks above provide an in-depth explanation of how to set up Vitis AI on the host computer and on different target hardware. For the purposes of this tutorial, you only require the following steps:

- Download the SD card Image for the ZCU104 (HERE)

- Download Etcher from: https://etcher.io/ and install on your Host computer.

- Use the Etcher software to burn the image file onto the SD card.

- Insert the SD card with the image into the ZCU104.

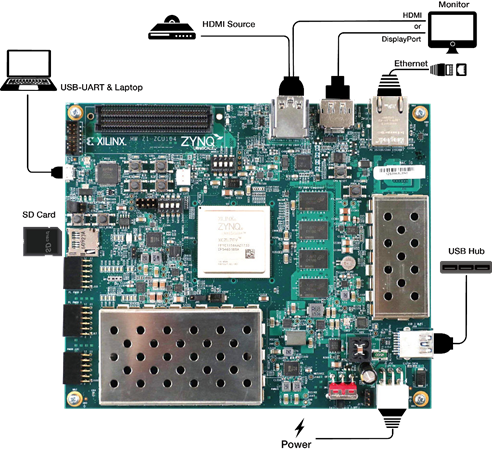

- Connect all required peripherals to the target (see figure#1).

- Plug in the power and boot the board.

- Set up your terminal program TeraTerm (Here) and connect to each of the terminal ports using the following connection settings:

- baud rate: 115200 bps

- data bit: 8

- stop bit: 1

- no parity

- Once you see the ZCU104 boot information you have located the correct serial port.

- Ensure your host computer and target hardware are on the same LAN. The ZCU104 is DHCP enabled and will be assigned an IP address by your router.

- From the serial terminal, type ifconfig at the Linux prompt to determine the target’s IP address.

Once you have the target’s IP address, you can use a network communication tool such as MobaXterm (Here) to set up an SSH connection to the target (user = root, password = root). This provides a stable, high-speed connection for transferring files to and from the target hardware.

Note: The Vitis AI Runtime packages, VART samples, Vitis-AI-Library samples and models have been built into the board image. Therefore, you do not need to install Vitis AI Runtime packages and model packages on the board separately. However, users can still install models or Vitis AI Runtime on their own image.

PetaLinux Configuration (not required for this tutorial)

The instructions below can be used to enable the correct PetaLinux settings for the Vitis AI Profiler. The Xilinx prebuilt SD card images come with these settings enabled. This section is provided as a reference.

:petalinux-config -c kernel

Enable the following settings for the Linux kernel.

- General architecture-dependent options ---> [*] Kprobes

- Kernel hacking ---> [*] Tracers

- Kernel hacking ---> [*] Tracers --->

[*] Kernel Function Tracer

[*] Enable kprobes-based dynamic events

[*] Enable uprobes-based dynamic events

Enable the following setting for root-fs

:petalinux-config -c rootfs

- user-packages ---> modules ---> [*] packagegroup-petalinux-self-hosted

Rebuild Linux

:petalinux-build
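To confirm that an image has the kernel options above enabled, one option is to inspect the kernel configuration text. The following is a minimal sketch and not part of the Vitis AI tools. It assumes that the menu entries listed above correspond to the symbols CONFIG_KPROBES, CONFIG_FTRACE, CONFIG_FUNCTION_TRACER, CONFIG_KPROBE_EVENTS and CONFIG_UPROBE_EVENTS (the names can differ between kernel versions), and that the running kernel exposes its configuration at /proc/config.gz, which is not guaranteed.

```python
import gzip
from pathlib import Path

# Assumed mapping of the menuconfig entries above to kernel config symbols.
REQUIRED_SYMBOLS = [
    "CONFIG_KPROBES",
    "CONFIG_FTRACE",
    "CONFIG_FUNCTION_TRACER",
    "CONFIG_KPROBE_EVENTS",
    "CONFIG_UPROBE_EVENTS",
]


def missing_symbols(config_text, required=REQUIRED_SYMBOLS):
    """Return the required symbols that are not set to 'y' in config_text."""
    enabled = set()
    for line in config_text.splitlines():
        line = line.strip()
        # Skip comments such as "# CONFIG_FTRACE is not set" and blank lines.
        if line.startswith("#") or "=" not in line:
            continue
        name, value = line.split("=", 1)
        if value == "y":
            enabled.add(name)
    return [symbol for symbol in required if symbol not in enabled]


def read_running_config(path="/proc/config.gz"):
    """Read the running kernel's config, or return None if it is not exposed."""
    config_path = Path(path)
    if not config_path.exists():
        return None
    with gzip.open(config_path, "rt") as handle:
        return handle.read()


if __name__ == "__main__":
    text = read_running_config()
    if text is None:
        print("Kernel config is not exposed at /proc/config.gz")
    else:
        print("Missing symbols:", missing_symbols(text) or "none")
```

An empty result suggests the tracing options are present; any symbol reported as missing points back to the corresponding menu entry above.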

Simple Trace Example:

On the target hardware change directories to the VART examples as shown below:

root@xilinx-zcu104-2020_2:~/Vitis-AI/demo/VART#

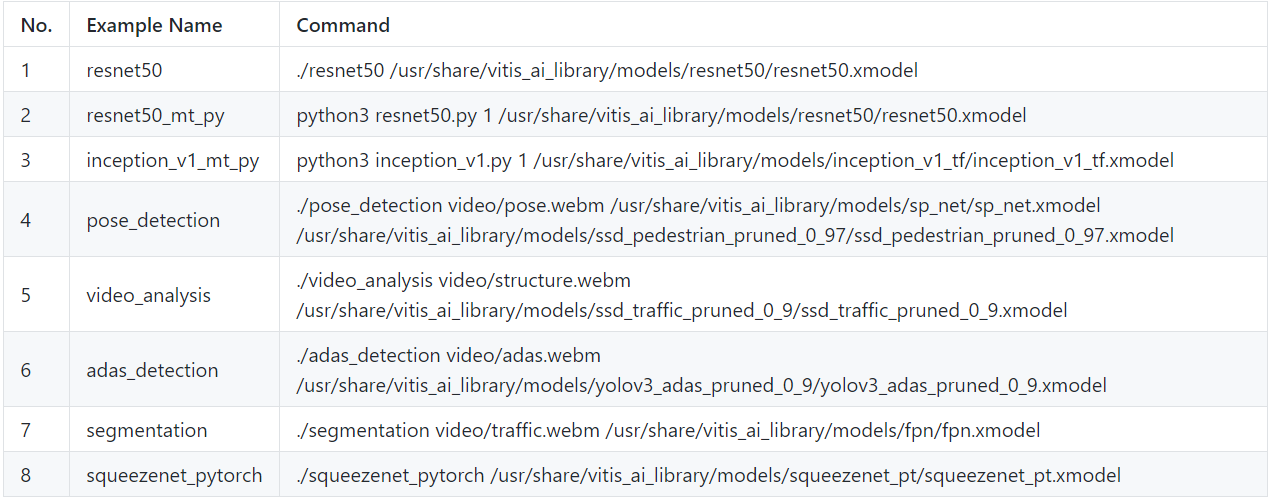

The VART examples come with many machine learning models you can run on the target hardware. The below table provides the various model names and execution commands to run the models on the target. In this tutorial we will run model #1 (resnet50) (see figure#2).

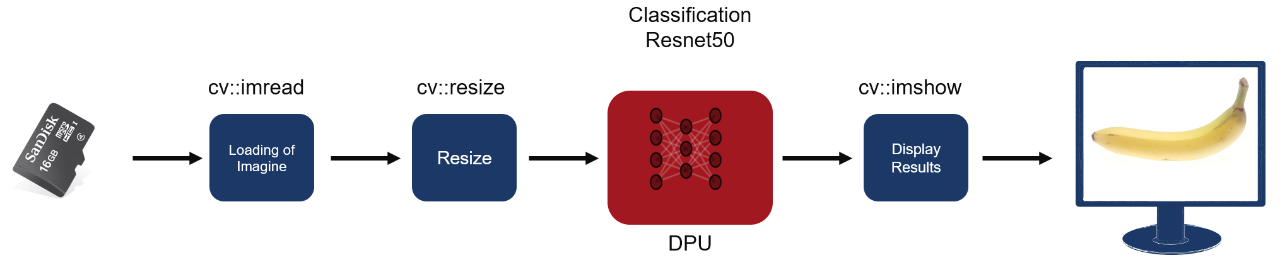

Locate the resnet50 application (main.cc) and open it in a text editor. The file is located in Vitis-AI/demo/VART/resnet50/src. The main.cc program consists of the key functions shown below. Figure#3 illustrates the data flow in the example design: images are read from the SD card, resized to 224x224, classified, and then displayed on the monitor.

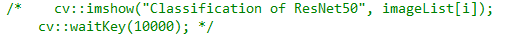

As written, the application processes one image, displays it, and then waits. To allow for fast image processing we will disable the cv::imshow function and the associated delay. Search for the cv::imshow call and comment it out. This will allow us to process many images in a short period of time.

Also, make note of the additional functions (TopK and CPUCalcSoftmax) processed by the CPU in this application. These functions can be traced by the Profiler and added to the captured results.

Save and close your text editor.

Build the Application:

Enter the command: ./build.sh

Once complete you will see the compiled application in the same directory. The example design requires the images to be classified to be loaded onto the SD card. Using MobaXterm, drag and drop your images of choice to the following directory (/home/root/Vitis-AI/demo/VART). These are the images that will be classified by the example design. The resnet50 neural network expects 224x224 resolution input. Other image sizes can be used because the data pipeline includes an image scaler.

If you wish to build your application on the host machine, additional cross-compilation tools are required. Step by step installation instructions can be found in UG1414 (LINK).

Vitis AI Profiler Options:

The Vitis AI Profiler options can be displayed by typing

:vaitrace --help

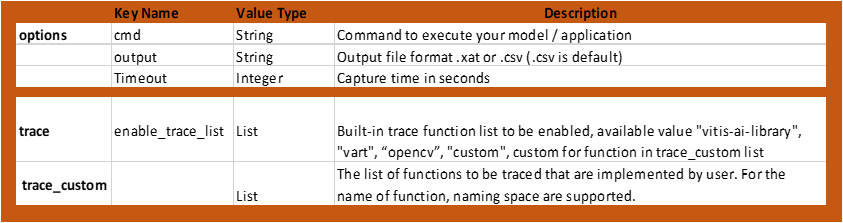

Figure#4 shows the key profiler options:

The profiler options are captured in a JSON file and executed by the profiler using the -c switch.

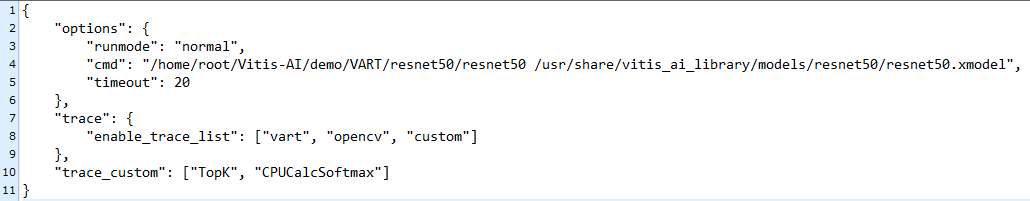

In the JSON file below (see figure#5) the runmode is set to “normal”. In this mode, the profiler traces the resnet50 model as a single task, meaning all layers of the model are captured as a single timed event. This is a good option for comparing the DPU performance against other processing blocks within the system’s data path.

The “cmd” entry points to the resnet50 application and model for execution and profiling. This can be altered to trace other VART / Vitis Libs examples. The “trace” / “trace_custom” entries identify the areas for the profiler to capture. Note that trace_custom profiles the two CPU functions found during the review of the main.cc application file.
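Because the configuration file itself appears only as a figure, the following sketch shows one way such a file could be generated. The key layout ("options" holding "runmode" and "cmd", a "trace" section with "enable_trace_list", and a top-level "trace_custom" list) is an assumption based on the description above, and the xmodel path is a placeholder. Compare the result against the config.json shipped with your board image before relying on it.

```python
import json


def make_vaitrace_config(cmd, runmode="normal", custom_functions=()):
    """Build a vaitrace-style configuration dictionary.

    The key names are assumptions modeled on the description in the text,
    not a verified schema.
    """
    return {
        "options": {
            "runmode": runmode,  # "normal" traces the model as one task
            "cmd": cmd,          # application and model to execute
        },
        "trace": {
            "enable_trace_list": ["vart", "custom"],  # assumed value
        },
        "trace_custom": list(custom_functions),  # CPU functions to trace
    }


config = make_vaitrace_config(
    cmd="./resnet50 /path/to/resnet50.xmodel",  # placeholder model path
    runmode="normal",
    custom_functions=["TopK", "CPUCalcSoftmax"],
)

if __name__ == "__main__":
    # Print instead of writing to disk so the sketch has no side effects.
    print(json.dumps(config, indent=4))
```

Redirecting the printed output to a file gives something that can be passed to the profiler with the -c switch, provided the key names match what your profiler version expects.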

Start Profiling:

At the Linux prompt begin profiling by typing the following command

root@xilinx-zcu104-2020_2:~/Vitis-AI/demo/VART/resnet50# vaitrace -c config.json

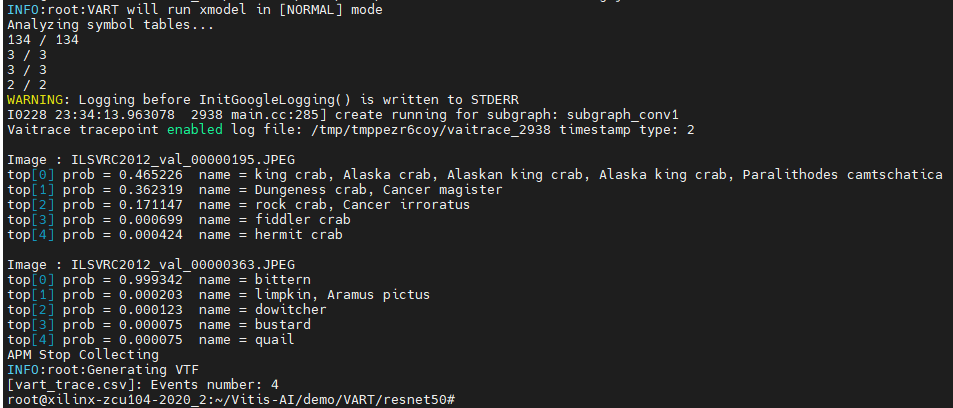

Figure #6 is an example of the profiler output. Different input images will yield different classification results.

Profiler Screen Output

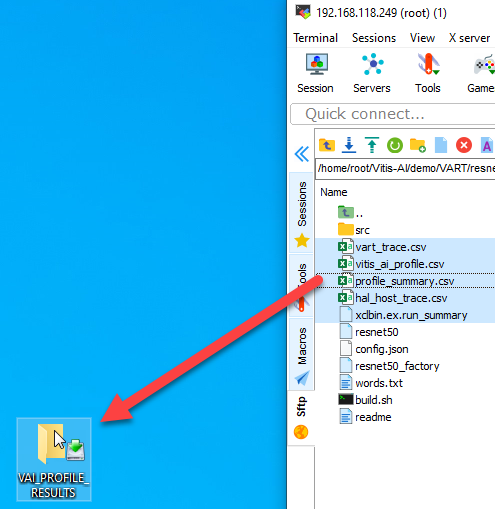

Move the output file to the Host for Analysis:

At the completion of the profiling run you will find five new files containing your captured profile results. Using MobaXterm, copy these files to your host computer (see figure#7).

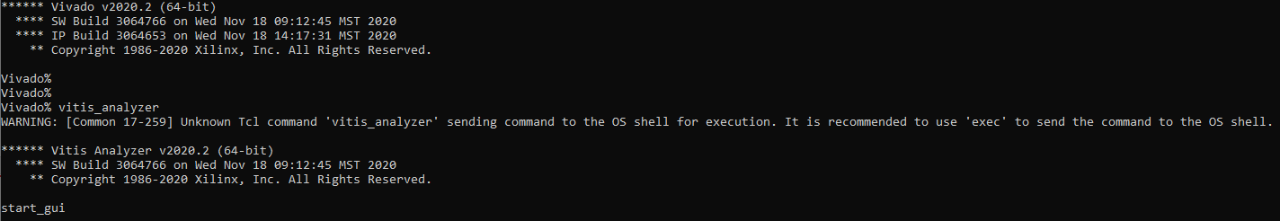

Start Vitis Analyzer using the Vivado TCL console:

On the host computer, use the Vivado TCL console to start the Vitis Analyzer. Ignore the unknown tcl command warning.

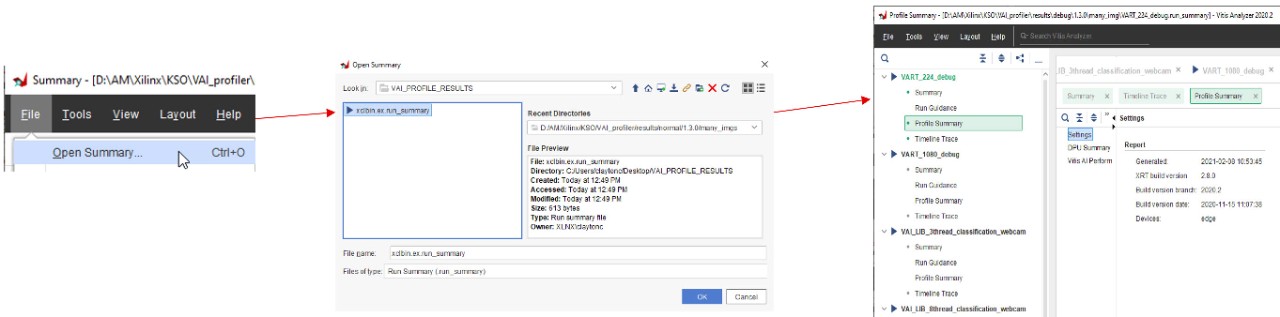

Load the captured results for Analysis

The captured results can be loaded into the Vitis Analyzer by using the following steps (see figure#8)

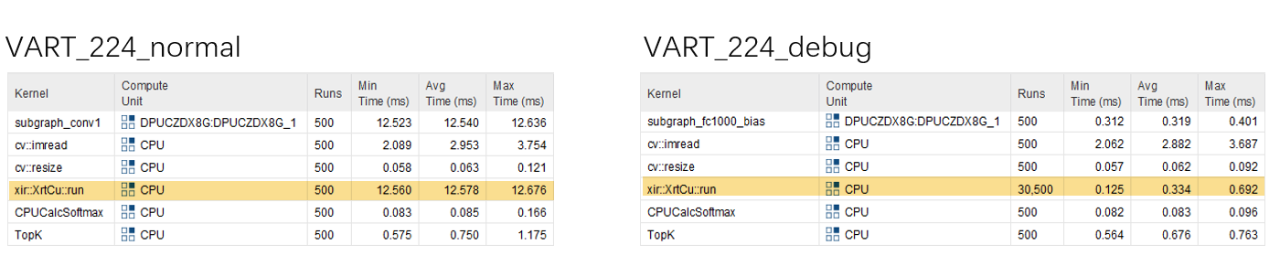

The Vitis Analyzer provides status and version information under the Summary and Run Guidance sections to allow for capture management. The profiler information is displayed under the DPU Summary (see figure#9). In this example, after processing 500 images, the complete resnet50 model averages approximately 12.5 ms of execution time. Also displayed are the CPU functions that were executed as pre/post data processing blocks. In this case the input images were already the correct resolution (224x224), so the cv::resize function was only lightly utilized.

The Vitis Analyzer GUI also provides bandwidth utilization on the Zynq UltraScale+ MPSoC memory controller at the port level (see figure#10). By looking at the hardware design, we can determine which memory clients are assigned to each memory port and, in turn, understand their memory bandwidth consumption. In this example, we have two DPUs with the following assigned memory ports.

| | Dpuczdx8G_1 | Dpuczdx8G_2 |
| --- | --- | --- |
| instr_port | S2(HPC0) | S2(HPC0) |
| data_ports | S3(HP0) and S4(HP1) | S4(HP2) and S5(HP3) |

The Vitis Analyzer can enable or disable each DDR port layer using the gear icon in the upper right-hand corner of the GUI. This feature lets you confirm each memory client’s usage during system-level execution.
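When reading the per-port bandwidth view, it can help to invert the assignment and list which DPU interfaces share each DDR controller port. The sketch below does this for the port assignments listed above. The dictionary layout and function name are illustrative only and are not part of any Xilinx tool.

```python
from collections import defaultdict

# Port assignments copied from the text above.
DPU_PORTS = {
    "Dpuczdx8G_1": {
        "instr_port": ["S2(HPC0)"],
        "data_ports": ["S3(HP0)", "S4(HP1)"],
    },
    "Dpuczdx8G_2": {
        "instr_port": ["S2(HPC0)"],
        "data_ports": ["S4(HP2)", "S5(HP3)"],
    },
}


def clients_by_ddr_port(dpu_ports):
    """Group DPU interfaces by DDR controller port (the 'S<n>' prefix)."""
    by_port = defaultdict(list)
    for dpu, roles in dpu_ports.items():
        for role, ports in roles.items():
            for port in ports:
                ddr_port = port.split("(", 1)[0]  # "S4(HP1)" -> "S4"
                by_port[ddr_port].append(f"{dpu} {role} via {port}")
    return dict(by_port)


if __name__ == "__main__":
    for ddr_port, clients in sorted(clients_by_ddr_port(DPU_PORTS).items()):
        print(ddr_port, "->", "; ".join(clients))
```

With these assignments, S2 and S4 each carry traffic from both DPUs, so bandwidth reported on those ports cannot be attributed to a single DPU without further information.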

Fine Grain Profiling Example:

To enable fine-grain profiling, update the JSON file and switch the runmode to “debug”. In this version of the profiler there is a known issue with this switch setting and a manual edit is required.

Open the following file “vaitraceDefaults.py” at the following location on the target.

/usr/bin/xlnx/vaitrace/vaitraceDefaults.py

Edit line 122 and switch the default runmode from “normal” to “debug”. Save this change and close the file. This manual edit forces the profiler to perform fine-grain profiling and ignore the runmode set in the JSON file. This issue will be addressed in the Vitis AI 1.4 tools.

Rerun the profiler on your model using the same command used in the simple example.

root@xilinx-zcu104-2020_2:~/Vitis-AI/demo/VART/resnet50# vaitrace -c config.json

In this mode, the profiler profiles each layer of the resnet50 model. To obtain a complete system profile, the custom trace remains enabled on our application functions (TopK and CPUCalcSoftmax).

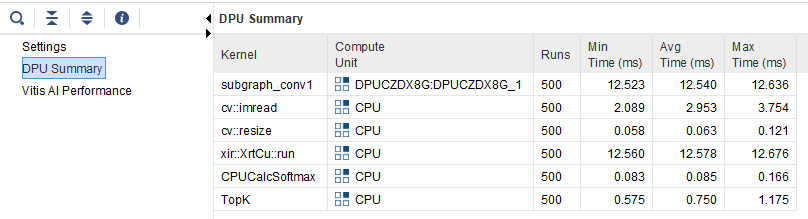

DPU layer by layer summary with CPU Functions

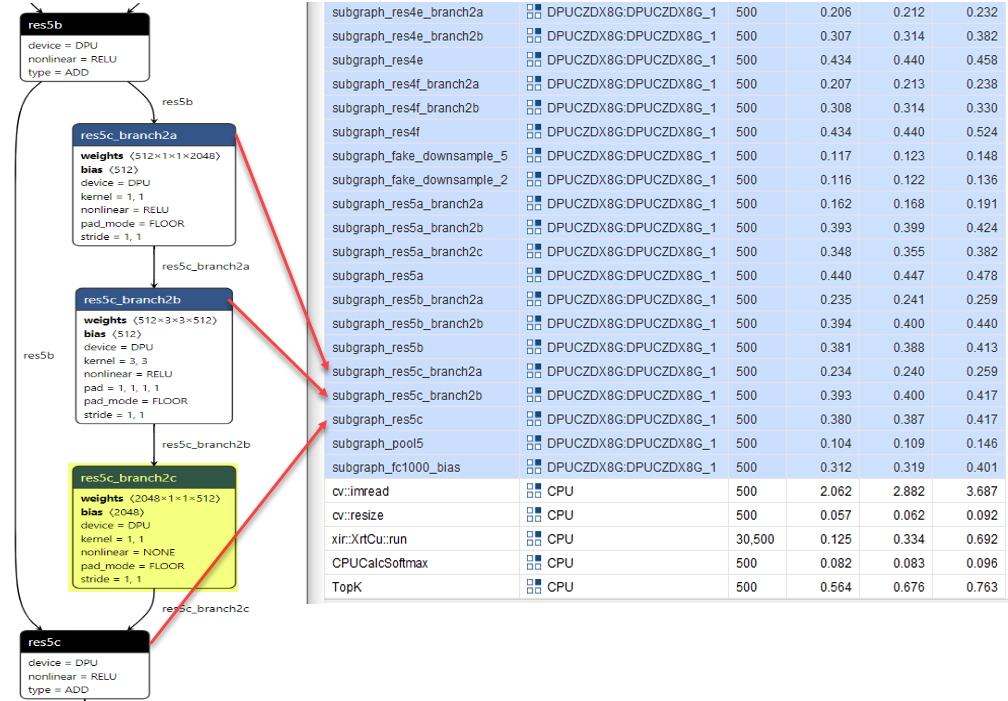

In the captured results note the resnet50 model has a total of 61 layers (see figure#11). Each layer is profiled and listed. Fine-grain profiling will allow for analysis of each layer’s performance during system-level execution.

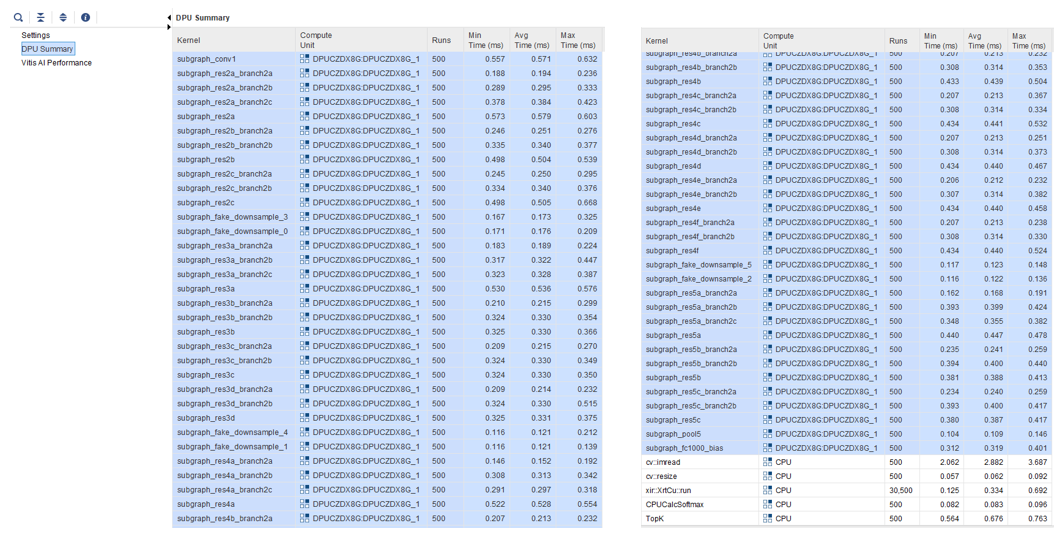

Profiler Observations:

In this tutorial, we completed two profile captures of the VART resnet50 model. Each capture provides slightly different information when reviewed. In normal mode the model was processed as one element and yielded an average time of 12.578 ms, while in debug mode the reported average runtime is 0.334 ms. Why is there such a large difference? (see figure#12)

In debug mode the reported time is the average time per layer of the model. We therefore need to multiply it by the number of layers (0.334 ms x 61), which gives a total average time of 20.374 ms. The difference from the normal-mode figure illustrates the additional overhead that fine-grain profiling adds to the execution of the model.

The resnet50 xmodel contains 61 subgraphs

Normal mode

- The compiler merges the 61 subgraphs into 1 big subgraph to reduce overhead (scheduling and memory)

- Total Avg Time = 12.578 ms

Debug mode

- One complete inference contains 61 subgraphs and calls xrtCu::run 61 times

- Each call = 0.334 ms x 61 calls

- Total Avg Time = 20.374 ms
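The arithmetic behind this comparison can be reproduced in a few lines. The sketch below uses only the figures quoted above. The overhead values it derives are simple differences between the two captures, not additional measurements.

```python
NUM_SUBGRAPHS = 61          # subgraphs in the resnet50 xmodel
NORMAL_TOTAL_MS = 12.578    # average time per inference in normal mode
DEBUG_PER_CALL_MS = 0.334   # average time per subgraph call in debug mode


def debug_mode_total_ms(per_call_ms, calls):
    """Total inference time when every subgraph is a separately timed call."""
    return per_call_ms * calls


debug_total_ms = debug_mode_total_ms(DEBUG_PER_CALL_MS, NUM_SUBGRAPHS)
overhead_ms = debug_total_ms - NORMAL_TOTAL_MS
overhead_pct = 100.0 * overhead_ms / NORMAL_TOTAL_MS

if __name__ == "__main__":
    print(f"debug total: {debug_total_ms:.3f} ms")   # 20.374 ms
    print(f"overhead:    {overhead_ms:.3f} ms")      # 7.796 ms
    print(f"relative:    {overhead_pct:.0f}%")       # about 62%
```

In other words, for this capture, per-layer profiling made each inference roughly 62% slower than the merged normal-mode run, which is why debug-mode timings are best used to compare layers with each other and not as absolute throughput figures.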

Vitis AI Tool Optimizations

When a model is processed by the Vitis AI tools, the tools apply various optimizations and break the graph into subgraphs for execution on the DPU. These optimizations can include layer / operator fusion to improve execution performance and DDR memory access. Figure#13 shows an example of these optimizations. In this case, layer “res5c_branch2c” was fused with layer “res5c”, so the Profiler does not report it separately.

Viewing the Xmodel

The Vitis AI tools produce an xmodel which can be viewed by Netron at different stages during compilation.

- Netron (LINK)

Data Resizing Example:

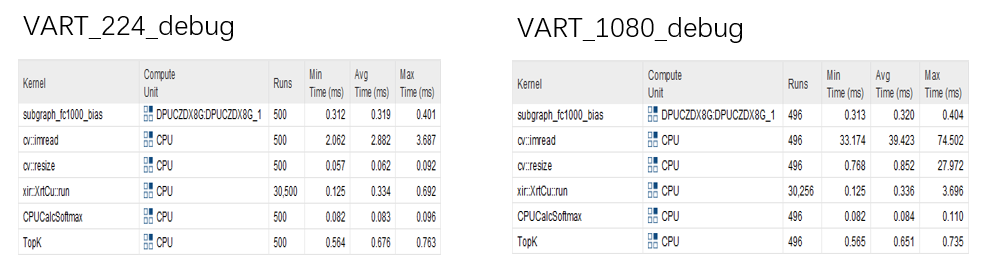

Below is an example of two fine-grain profiler captures using the VART resnet50 model (see figure #15). In the first case we use image data at 224x224 resolution. In the second case we increase the image size to 1920x1080 resolution (HD). With the smaller image, the resize function (cv::resize) consumed little CPU time because the image was already the correct size for the resnet50 model. When the HD image was classified, the cv::resize function consumed more CPU time to resize the image. This is reflected in the images below.

If the cv::resize function is deemed the performance bottleneck, it could be offloaded from the CPU and implemented in programmable logic (PL) using Vitis Libs (LINK).
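A quick way to see why the HD case costs more is to compare how many source pixels the scaler must read in each case. The sketch below computes that ratio from the two resolutions mentioned above. Pixel count is only a rough proxy: the actual cv::resize time depends on the interpolation method, memory bandwidth, and CPU, none of which are modeled here.

```python
def pixel_count(width, height):
    """Number of pixels in an image of the given size."""
    return width * height


MODEL_INPUT = pixel_count(224, 224)     # resnet50 input size
HD_IMAGE = pixel_count(1920, 1080)      # HD source image

# How many times more source pixels the HD image contains.
pixel_ratio = HD_IMAGE / MODEL_INPUT

if __name__ == "__main__":
    print(f"224x224 pixels:   {MODEL_INPUT}")
    print(f"1920x1080 pixels: {HD_IMAGE}")
    print(f"ratio:            {pixel_ratio:.1f}x")
```

The HD frame contains roughly 41 times as many pixels as the model input, which is consistent with the resize stage becoming visible in the second capture.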

Conclusion:

The Vitis AI Profiler enables a system-level view of your AI model within your system and provides the following:

A unified timeline that shows running status of different compute units in the FPGA

- DPU tasks running status and utilization

- CPU busy/idle status

- Time consumption of each stage

Information about hardware while executing

- Memory bandwidth

- Real-time throughputs of AI inference (FPS)

Hardware information

- CPU model/frequency

- DPU kernel latency

Statistical information

- Time consumed at each stage of each processing block in the system

About Clayton Cameron

Clayton Cameron is a Senior Staff FAE based in Toronto. He joined Xilinx in 2000, supporting telecom customers in the Ottawa office. As an FAE, Clayton greatly enjoys helping customers and solving problems. He also enjoys the diversity of his position and the variety of challenges he faces daily. In his spare time, he lets off steam at the Dojo achieving his 3rd Blackbelt in Juko Ryu Tora Tatsumaki Jiu-Jitsu. At home, he loves to spend time with his wife and three children.