Sparse vs. Dense TOPS. What are they, what’s the difference, and what does it mean to me?

January 24, 2022

Up until very recently, any mention of TOPS always referred to dense TOPS. However, with the recent push to support zero-compression in sparse matrices, the term sparse TOPS has appeared. What is the difference between dense TOPS and sparse TOPS? And why should you care about sparsity? Let’s dive into these topics.

Sparsity

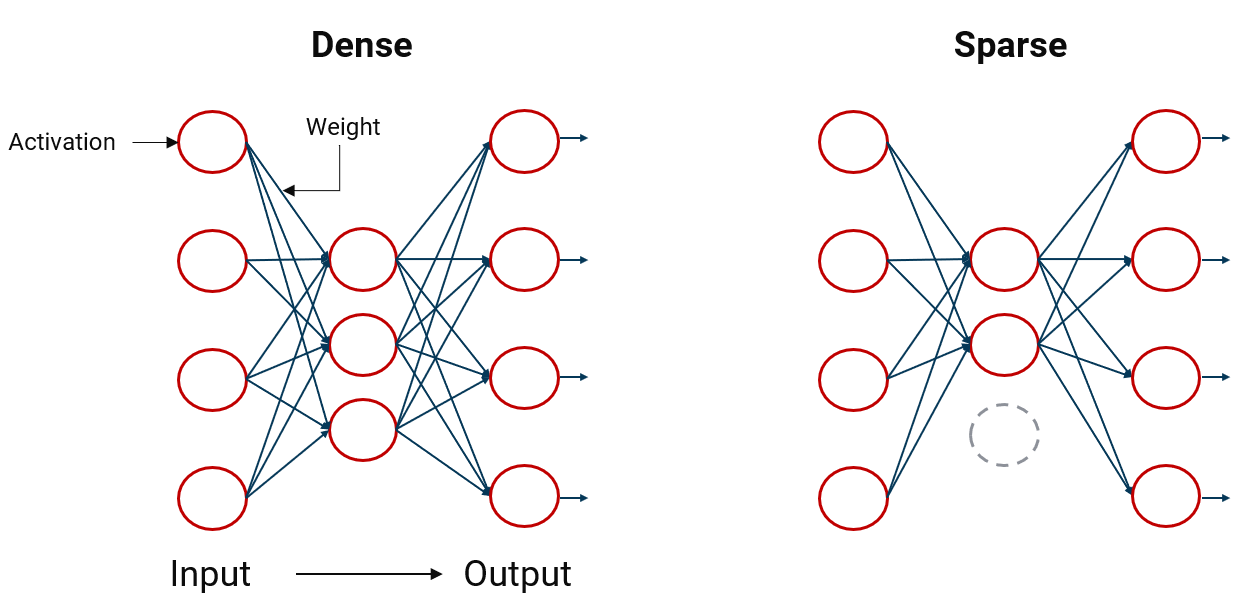

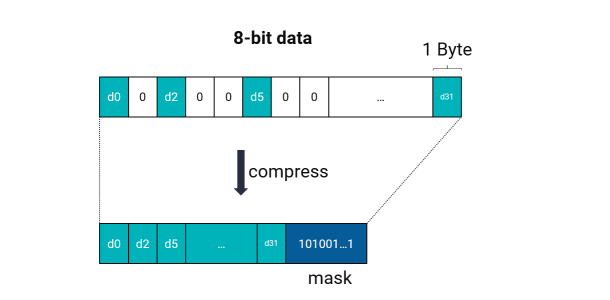

Artificial Intelligence (AI) is heavily dependent on Machine Learning (ML), and ML workloads are dominated by matrix multiplication. A matrix can represent an object such as an image, for example, where nonzero values correspond to pixels and zero values correspond to blank space. Because multiplying by zero contributes nothing to the result, these zero values can be compressed or skipped, which reduces the number of operations needed to multiply two matrices. The proportion of zero values in a matrix is called its sparsity, and hardware that compresses or skips those zeros is said to support sparsity.
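To make the saving concrete, here is a minimal Python sketch that counts the multiply-accumulate operations in a naive matrix multiplication, with and without skipping zero values. The function name `matmul_count_macs` and the example matrices are made up for illustration; real hardware compresses and skips zeros in its own way, so treat this only as a picture of the idea.

```python
def matmul_count_macs(a, b, skip_zeros=False):
    """Multiply a (n x k) by b (k x m) and count the MACs actually executed."""
    n, k, m = len(a), len(b), len(b[0])
    out = [[0] * m for _ in range(n)]
    macs = 0
    for i in range(n):
        for p in range(k):
            a_ip = a[i][p]
            if skip_zeros and a_ip == 0:
                continue  # a zero operand adds nothing to the result
            for j in range(m):
                out[i][j] += a_ip * b[p][j]
                macs += 1
    return out, macs


# Illustrative 4x4 matrix in which 10 of the 16 values are zero.
a = [[1, 0, 0, 2],
     [0, 0, 3, 0],
     [0, 4, 0, 0],
     [5, 0, 0, 6]]
b = [[1, 2], [3, 4], [5, 6], [7, 8]]

dense_out, dense_macs = matmul_count_macs(a, b)
sparse_out, sparse_macs = matmul_count_macs(a, b, skip_zeros=True)

print(dense_macs, sparse_macs)  # 32 MACs without skipping, 12 with skipping
print(dense_out == sparse_out)  # True: the result is unchanged
```

Both runs produce the same product; only the amount of work differs.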

Tera-operations per second, or TOPS, is a rudimentary measure of the peak number of operations that your system can compute each second. TOPS can be determined by multiplying the number of operations performed per clock cycle by the clock frequency of the system. For example, a device that can perform 512 multiply-accumulate (MAC) operations per clock cycle and runs at 1 GHz delivers 512 x 2 x 1 GHz = 1,024 giga-operations per second, or 1.024 TOPS, because each MAC counts as two operations (one multiply and one add). This number represents dense TOPS.
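The calculation can be sketched in a few lines of Python. This assumes the 512 MACs are issued every clock cycle and that each MAC counts as two operations (a multiply and an add); the function name `dense_tops` is hypothetical.

```python
def dense_tops(macs_per_cycle, clock_hz, ops_per_mac=2):
    """Peak dense throughput in tera-operations per second."""
    ops_per_second = macs_per_cycle * ops_per_mac * clock_hz
    return ops_per_second / 1e12  # 1 tera = 10**12


# The example device: 512 MACs per cycle at 1 GHz.
example_tops = dense_tops(macs_per_cycle=512, clock_hz=1e9)
print(f"{example_tops:.3f} dense TOPS")  # 1.024 dense TOPS
```

Note the units: 512 x 2 x 1 GHz is 1,024 giga-operations per second, which is roughly 1 TOPS.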

How to Calculate Sparse TOPS

The number above was calculated without taking into consideration the improvement in performance that can be realized if the zero values in the matrices are compressed. If half of the values in a matrix are zero and the hardware skips them, the number of operations required falls by 50%, which results in an effective performance improvement of 2X. Sparse TOPS is the dense TOPS figure multiplied by this improvement. A matrix in which a large share of the values are zero is a sparse matrix, whereas a matrix made up mostly of nonzero values is a dense matrix.
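As a rough sketch, the relationship can be written as dense TOPS divided by the fraction of operations that still has to be executed. The function name `sparse_tops` and the 1.024 starting figure are assumptions for illustration, and the result is a theoretical peak, not a measured number.

```python
def sparse_tops(dense_tops, skipped_fraction):
    """Effective peak TOPS when a fraction of the operations is skipped."""
    if not 0 <= skipped_fraction < 1:
        raise ValueError("skipped_fraction must be in the range [0, 1)")
    return dense_tops / (1 - skipped_fraction)


# Skipping 50% of the operations doubles the effective throughput.
effective = sparse_tops(dense_tops=1.024, skipped_fraction=0.5)
print(f"{effective:.3f} sparse TOPS")  # 2.048 sparse TOPS
```

With nothing skipped (`skipped_fraction=0`), the function returns the dense figure unchanged.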

The Difference between Sparse TOPS and Dense TOPS

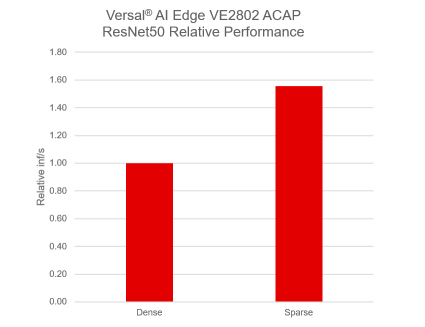

Sparsity is powerful because it can theoretically improve system performance by up to 2X. However, it’s important to understand the difference between sparse TOPS and dense TOPS. When comparing systems or devices, make sure you don’t fall into the trap of comparing one device's dense TOPS to another's sparse TOPS. Also, the theoretical performance improvement usually cannot be fully realized in a practical system, so take any performance claims with a grain of salt. Benchmarking with real ML networks such as ResNet50, YOLOv3, and MobileNet reveals much more about the performance of an AI chip than a TOPS figure does.
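One way to avoid the comparison trap is to put every quoted figure on a dense basis before comparing. The sketch below assumes the vendor's sparse number is simply the dense number multiplied by a theoretical 2X; the device names and TOPS values are hypothetical and do not describe any real product.

```python
def dense_equivalent_tops(quoted_tops, is_sparse, assumed_sparse_speedup=2.0):
    """Convert a quoted TOPS figure to a dense-TOPS basis."""
    if assumed_sparse_speedup < 1:
        raise ValueError("assumed_sparse_speedup must be at least 1")
    return quoted_tops / assumed_sparse_speedup if is_sparse else quoted_tops


# Hypothetical datasheet figures: (quoted TOPS, quoted as sparse?)
quotes = {
    "device_a": (200.0, True),   # headline number is sparse TOPS
    "device_b": (120.0, False),  # headline number is dense TOPS
}

normalized = {
    name: dense_equivalent_tops(tops, is_sparse)
    for name, (tops, is_sparse) in quotes.items()
}
best = max(normalized, key=normalized.get)
print(normalized)  # device_a drops to 100.0 on a dense basis
print(best)        # device_b
```

Even after normalizing, these remain peak figures, so network-level benchmarks are still the better guide.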

Results with batch size = 18, INT8 precision

Versal AI Edge Devices and Their TOPS Numbers

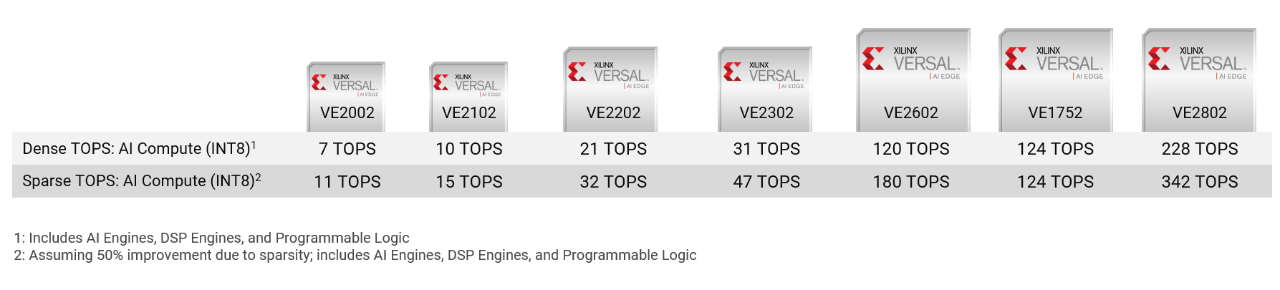

Sparsity support is one of the key features in Xilinx’s AI Engines for machine learning (AIE-ML), available in the Versal® AI Edge and Versal AI Core adaptive compute acceleration platforms (ACAPs).

The TOPS numbers provided for the Versal AI Edge series in the product selection guide and all associated collateral are dense TOPS. Here is an estimate of the sparse TOPS achievable in each of the Versal AI Edge series devices:

Next Steps

Join the early access program if you want to learn more about AI performance with the Versal AI Edge series. Contact your local Xilinx representative or contact sales to get started.
